How Databricks Supports Multi-Cloud & Global Data Strategies

Multi-Cloud Is No Longer Optional

Introduction

A few years ago, “multi-cloud” was often a theoretical discussion—something enterprises debated but rarely implemented in full. Today, it is a practical reality.

Global enterprises operate across:

- Multiple geographies

- Different regulatory environments

- Diverse business units with varying cloud preferences

- Mergers and acquisitions that bring inherited cloud stacks

As a result, data strategies must now function across AWS, Azure, and Google Cloud, while ensuring governance, performance, and cost control at scale.

This is where Databricks has emerged as a critical enabler. Designed as a cloud-agnostic, open, and unified data platform, Databricks allows enterprises to build and operate consistent global data and AI strategies across clouds, without fragmenting teams or duplicating effort.

This blog explores how Databricks supports multi-cloud and global data strategies, and why enterprises increasingly view it as the foundation for modern, distributed data ecosystems.

The Challenges of Multi-Cloud & Global Data Environments

Before understanding how Databricks helps, it’s important to understand the problems enterprises face.

1. Fragmented Data Platforms

Different regions often use different cloud services:

- AWS in North America

- Azure in Europe

- GCP in APAC

This leads to siloed data platforms, inconsistent tooling, and duplicated pipelines.

2. Regulatory and Data Residency Constraints

Laws like GDPR, DPDP, HIPAA, and industry regulations often require:

- Data to stay within a country or region

- Strict access controls and auditing

- Clear lineage and governance

3. Operational Complexity

Running separate data stacks across clouds means:

- Multiple skill sets

- Higher operational overhead

- Slower innovation cycles

4. Inconsistent Analytics & AI Capabilities

Advanced analytics and AI often become centralized in one cloud, while other regions lag behind due to platform limitations.

A successful global data strategy must address all of these challenges simultaneously.

Databricks’ Core Advantage: A Cloud-Agnostic Lakehouse Platform

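At the heart of Databricks’ multi-cloud strength is its Lakehouse architecture. It combines the low-cost, open storage of data lakes with the reliability and performance of data warehouses (for example, ACID transactions and schema enforcement through Delta Lake), all built on open technologies.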

Key Principles That Enable Multi-Cloud

- Open formats (Delta Lake, Parquet)

- Cloud-native deployments on AWS, Azure, and GCP

- Separation of compute and storage

- Consistent APIs and tooling across clouds

This design allows organizations to deploy Databricks in any cloud, while maintaining a consistent data and AI experience globally.

Running Databricks Across AWS, Azure & GCP

Databricks offers first-class, deeply integrated deployments on all major cloud platforms:

- Databricks on AWS

- Azure Databricks

- Databricks on Google Cloud

Each deployment integrates natively with the underlying cloud’s:

- Object storage (S3, ADLS, GCS)

- Identity and access management

- Networking and security services

Yet, from a user and platform perspective, the experience remains consistent.
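A minimal Python sketch can make "native storage underneath, consistent experience on top" concrete. It assumes a hypothetical `StorageLocation` record that a platform team might keep for each region. The only real detail it encodes is the URI scheme each cloud's object store uses: `s3://` for S3, `abfss://` for ADLS Gen2, and `gs://` for GCS. All bucket, container, and account names are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageLocation:
    """Hypothetical per-region storage record kept by a platform team."""
    cloud: str          # "aws", "azure", or "gcp"
    bucket: str         # S3 bucket, ADLS container, or GCS bucket
    account: str = ""   # ADLS storage account (Azure only)


def storage_uri(loc: StorageLocation, path: str) -> str:
    """Build the cloud-native object-storage URI for a logical path."""
    path = path.lstrip("/")
    if loc.cloud == "aws":
        return f"s3://{loc.bucket}/{path}"
    if loc.cloud == "azure":
        if not loc.account:
            raise ValueError("ADLS locations need a storage account")
        return f"abfss://{loc.bucket}@{loc.account}.dfs.core.windows.net/{path}"
    if loc.cloud == "gcp":
        return f"gs://{loc.bucket}/{path}"
    raise ValueError(f"unsupported cloud: {loc.cloud}")


# Hypothetical regional locations: one logical path, three native URIs.
locations = {
    "us": StorageLocation("aws", "acme-lake-us"),
    "eu": StorageLocation("azure", "lake", account="acmelakeeu"),
    "apac": StorageLocation("gcp", "acme-lake-apac"),
}

for region, loc in locations.items():
    print(region, storage_uri(loc, "sales/orders"))
```

With this pattern, pipeline code refers only to the logical path, and a lookup like this one (in practice, Unity Catalog external locations) supplies the cloud-specific URI.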

Why This Matters

- Data engineers can reuse the same pipelines

- Data scientists can use the same ML workflows

- BI users get uniform analytics capabilities

- Governance models stay consistent

Multi-cloud no longer has to mean “multiple platforms to manage”; it can be run as one logical platform across clouds.

Enabling Global Data Strategies with Regional Autonomy

A common misconception is that global data strategies require centralization. In reality, modern enterprises need a federated model.

Databricks Supports Federated Data Architectures

With Databricks, organizations can:

- Deploy regional workspaces for compliance

- Keep data local to meet residency laws

- Define governance policies centrally

- Standardize data models and pipelines

For example:

- European customer data remains in EU regions

- APAC operational data stays local

- Global analytics models are standardized and reusable

This balance between local autonomy and global consistency is critical for scale.
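One way to picture this balance is a routing check. Each dataset is processed in its home region, while the business logic itself is shared globally. The sketch below is a hypothetical model in plain Python, not a Databricks API. `REGION_WORKSPACES`, `MUST_STAY_LOCAL`, and the workspace names are illustrative assumptions.

```python
# Hypothetical mapping of residency zones to regional workspaces.
REGION_WORKSPACES = {
    "EU": "workspace-eu-west",
    "APAC": "workspace-ap-south",
    "NA": "workspace-us-east",
}

# Hypothetical residency rules: zones whose data must be processed locally.
MUST_STAY_LOCAL = {"EU", "APAC"}


def target_workspace(record_zone: str, requested: str) -> str:
    """Return the workspace allowed to process data from record_zone.

    Zones under residency rules are pinned to their home workspace;
    other zones may run wherever the job was requested.
    """
    home = REGION_WORKSPACES[record_zone]
    if record_zone in MUST_STAY_LOCAL and requested != home:
        return home  # send the job to the data instead of moving the data
    return requested


def standard_revenue(rows):
    """Globally standardized logic that runs unchanged in every region."""
    return sum(r["amount"] for r in rows if r["status"] == "complete")


eu_rows = [
    {"amount": 120.0, "status": "complete"},
    {"amount": 30.0, "status": "refunded"},
]
print(target_workspace("EU", "workspace-us-east"))
print(standard_revenue(eu_rows))
```

The key design choice is that the job moves, not the data. Only the shared function is standardized, and each region executes it against local data.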

Unity Catalog: The Backbone of Global Governance

Governance is often the biggest blocker to global data strategies. Databricks addresses this with Unity Catalog.

What Unity Catalog Enables

- Centralized metadata management across workspaces (Unity Catalog uses one metastore per region, shared by that region’s workspaces)

- Fine-grained access controls (row, column, table level)

- Built-in data lineage and auditing

- Governance across data, ML models, and features

Why It’s Powerful for Multi-Cloud

Unity Catalog allows enterprises to:

- Apply consistent governance policies across regions

- Maintain visibility into who is accessing what data

- Simplify compliance reporting

- Reduce risk without slowing innovation

Governance becomes embedded in the platform rather than enforced through manual processes.
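In Unity Catalog, row filters and column masks are written as SQL user-defined functions and attached to tables. The same rule therefore applies to every query, whichever team runs it. The Python below only models that logic to illustrate one centrally defined policy applied uniformly. The group names, columns, and the `apply_policy` helper are hypothetical.

```python
from typing import Iterable

Row = dict


# Hypothetical central policy, defined once and reused by every regional team.
def row_filter(row: Row, groups: set[str]) -> bool:
    """Global admins see all rows; analysts see only their region's rows."""
    return "global_admins" in groups or f"analysts_{row['region'].lower()}" in groups


def mask_email(value: str, groups: set[str]) -> str:
    """Reveal full e-mail addresses only to the privacy team."""
    return value if "privacy_team" in groups else "***@" + value.split("@")[-1]


def apply_policy(rows: Iterable[Row], groups: set[str]) -> list[Row]:
    """Apply the row filter, then the column mask, to each visible row."""
    visible = []
    for row in rows:
        if row_filter(row, groups):
            masked = dict(row)
            masked["email"] = mask_email(row["email"], groups)
            visible.append(masked)
    return visible


customers = [
    {"id": 1, "region": "EU", "email": "anna@example.de"},
    {"id": 2, "region": "NA", "email": "bob@example.com"},
]

print(apply_policy(customers, {"analysts_eu"}))
```

An EU analyst sees only the EU row, with the e-mail masked. Adding `privacy_team` to a user's groups reveals the address without changing the table or the policy.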

Cross-Region & Cross-Team Data Sharing

In global enterprises, data sharing is unavoidable, but often risky.

Databricks enables secure, governed data sharing without data duplication.

Delta Sharing: Secure Data Sharing at Scale

With Delta Sharing, organizations can:

- Share live data across regions and clouds

- Avoid copying or exporting data

- Control access dynamically

- Share data with partners or internal teams

This supports use cases like:

- Global reporting

- Cross-border analytics

- Partner ecosystems

- M&A integration

All while maintaining full governance and auditability.
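Delta Sharing is an open protocol. A recipient receives a profile file, which is JSON containing the sharing server `endpoint` and a `bearerToken`, and addresses a table as `<profile-file>#<share>.<schema>.<table>`. The open-source `delta-sharing` Python connector accepts table URLs in this form, for example in `delta_sharing.load_as_pandas`. So that it stays self-contained, the sketch below only validates such a profile and URL using the standard library. The file name, share, and endpoint values are made up.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class SharedTable:
    profile: str
    share: str
    schema: str
    table: str


def parse_table_url(url: str) -> SharedTable:
    """Split '<profile-file>#<share>.<schema>.<table>' into its parts."""
    profile, sep, coordinate = url.rpartition("#")
    if not sep or not profile:
        raise ValueError(f"missing profile file in {url!r}")
    parts = coordinate.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected share.schema.table, got {coordinate!r}")
    return SharedTable(profile, *parts)


def read_profile(text: str) -> dict:
    """Check that a sharing profile carries the required fields."""
    profile = json.loads(text)
    for key in ("shareCredentialsVersion", "endpoint", "bearerToken"):
        if key not in profile:
            raise ValueError(f"profile is missing {key!r}")
    return profile


# Hypothetical values for illustration only.
profile_json = json.dumps({
    "shareCredentialsVersion": 1,
    "endpoint": "https://sharing.example.com/delta-sharing/",
    "bearerToken": "<token>",
})

print(read_profile(profile_json)["endpoint"])
print(parse_table_url("config.share#global_sales.reporting.daily_revenue"))
```

With network access and a real profile, the connector would take the same URL string. Validating it first lets a malformed share reference fail fast.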

Supporting Global Analytics & BI at Scale

Databricks is not just a data engineering platform; it is increasingly used for enterprise analytics and BI.

Global BI Enablement

- Databricks SQL Warehouses provide fast, scalable analytics

- Integration with Power BI, Tableau, Looker, and more

- Consistent semantic layers across regions

- Optimized performance using Delta Lake

This allows global business teams, no matter where their data resides, to:

- Access trusted data

- Use familiar BI tools

- Operate with consistent metrics
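In practice, "consistent metrics" means a metric is defined once and evaluated the same way against each region's data, instead of being re-implemented by each team. The following is a minimal sketch built around a hypothetical `Metric` registry in plain Python. On Databricks, this role would typically be filled by shared views or a semantic layer, which the code does not model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Metric:
    """Hypothetical single definition of a business metric."""
    name: str
    compute: Callable[[list[dict]], float]


def net_revenue(rows: list[dict]) -> float:
    # Orders minus refunds, rounded to cents.
    gross = sum(r["amount"] for r in rows if r["type"] == "order")
    refunds = sum(r["amount"] for r in rows if r["type"] == "refund")
    return round(gross - refunds, 2)


METRICS = {"net_revenue": Metric("net_revenue", net_revenue)}

# Hypothetical regional datasets.
regional_data = {
    "EU": [{"type": "order", "amount": 200.0}, {"type": "refund", "amount": 50.0}],
    "NA": [{"type": "order", "amount": 300.0}],
}

metric = METRICS["net_revenue"]
report = {region: metric.compute(rows) for region, rows in regional_data.items()}
print(report)
```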

Multi-Cloud AI & Machine Learning Enablement

AI initiatives often fail when platforms are inconsistent across regions.

Databricks addresses this by providing:

- Unified ML workflows across clouds

- MLflow for experiment tracking and model registry

- Feature Store for reusable features

- Support for real-time and batch ML

GenAI & Advanced AI Across Clouds

With Mosaic AI and native LLM support, Databricks enables:

- Training and fine-tuning models in-region

- Deploying models close to data sources

- Meeting latency and compliance requirements

This is critical for global AI strategies where data cannot be centralized.

Cost Optimization in a Multi-Cloud World

Cost management becomes more complex in multi-cloud environments.

Databricks provides:

- Transparent, usage-based pricing

- Independent scaling of compute and storage

- Monitoring tools for workload optimization

- Ability to use cloud-specific cost options, such as spot or preemptible instances

Organizations gain financial control without sacrificing flexibility.
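Because compute and storage are billed separately, a rough estimate for a classic (non-serverless) workload is the DBUs consumed multiplied by the DBU rate, plus the cloud provider's charge for the underlying instances. The sketch below compares the same job across two clouds. Every rate in it is a made-up placeholder, not a published price.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputeRate:
    """Hypothetical hourly rates for one cluster configuration."""
    dbu_per_hour: float     # DBUs the cluster consumes per hour
    usd_per_dbu: float      # Databricks price per DBU (placeholder)
    vm_usd_per_hour: float  # cloud provider instance cost (placeholder)


def job_cost(rate: ComputeRate, hours: float) -> float:
    """Estimate cost in USD as DBU charge plus infrastructure charge."""
    dbu_cost = rate.dbu_per_hour * hours * rate.usd_per_dbu
    vm_cost = rate.vm_usd_per_hour * hours
    return round(dbu_cost + vm_cost, 2)


# Placeholder numbers only; real rates vary by cloud, region, tier, and SKU.
rates = {
    "aws": ComputeRate(dbu_per_hour=4.0, usd_per_dbu=0.15, vm_usd_per_hour=1.20),
    "azure": ComputeRate(dbu_per_hour=4.0, usd_per_dbu=0.15, vm_usd_per_hour=1.10),
}

for cloud, rate in rates.items():
    print(cloud, job_cost(rate, hours=3))
```

Keeping the two components separate shows where savings come from. Cheaper instances, such as spot capacity, reduce only the infrastructure term, while tuning a job to consume fewer DBUs reduces the Databricks term.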

Supporting Global Operating Models & GCCs

Many enterprises operate Global Capability Centers (GCCs) or distributed data teams.

Databricks supports this model by:

- Enabling shared platforms across locations

- Allowing teams to collaborate on the same data

- Supporting “follow-the-sun” development

- Standardizing tools and best practices

This accelerates delivery while reducing duplication and skill silos.

Real-World Scenarios Where Databricks Excels

Scenario 1: Global BFSI Enterprise

- Strict data residency requirements

- Advanced risk and fraud analytics

- Multiple clouds across regions

Outcome: Databricks enables local compliance alongside global analytics models.

Scenario 2: Global Retail Organization

- Real-time inventory and customer analytics

- Different clouds due to regional IT decisions

Outcome: Databricks provides a unified analytics backbone.

Scenario 3: M&A-Driven Enterprise

- Multiple inherited data platforms

- Need for rapid integration

Outcome: Databricks acts as the unifying data layer.

Best Practices for Implementing Databricks in Multi-Cloud Environments

- Define a global data governance framework early

- Standardize data models and naming conventions

- Use Unity Catalog as the governance foundation

- Adopt a federated, not centralized, architecture

- Monitor performance and cost continuously

- Invest in enablement and platform ownership
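Unity Catalog addresses every table with a three-level name, `catalog.schema.table`, so naming conventions are easy to check automatically, for example in CI. The sketch below enforces one hypothetical convention: catalogs named `<region>_<domain>`, schemas limited to `bronze`, `silver`, or `gold`, and snake_case table names. The regions, layers, and pattern are assumptions you would adapt, not a Databricks standard.

```python
import re

# Hypothetical convention; adjust regions and layers to your own framework.
REGIONS = {"eu", "na", "apac"}
LAYERS = {"bronze", "silver", "gold"}
SNAKE = re.compile(r"^[a-z][a-z0-9_]*$")


def check_name(full_name: str) -> list[str]:
    """Return convention violations for a 'catalog.schema.table' name."""
    parts = full_name.split(".")
    if len(parts) != 3:
        return [f"expected catalog.schema.table, got {full_name!r}"]
    catalog, schema, table = parts
    problems = []
    region, _, domain = catalog.partition("_")
    if region not in REGIONS or not domain:
        problems.append(f"catalog {catalog!r} should be <region>_<domain>")
    if schema not in LAYERS:
        problems.append(f"schema {schema!r} should be one of {sorted(LAYERS)}")
    if not SNAKE.match(table):
        problems.append(f"table {table!r} should be snake_case")
    return problems


print(check_name("eu_sales.gold.daily_revenue"))  # no violations
print(check_name("Sales.reporting.DailyRevenue"))
```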

Conclusion: Databricks as the Foundation for Global Data & AI

Multi-cloud is no longer a future consideration; it is today’s reality. Enterprises that attempt to manage it with fragmented platforms and legacy architectures face rising costs, slower innovation, and governance risk.

Databricks offers a practical, scalable, and future-ready solution:

- One platform across clouds

- Consistent governance and security

- Support for analytics, AI, and GenAI

- Flexibility without lock-in

By enabling global data strategies with local compliance, Databricks empowers organizations to move faster, innovate responsibly, and compete at a global scale.

FAQs

1. What makes Databricks truly multi-cloud compared to other data platforms?

Databricks is built on an open Lakehouse architecture that runs natively on AWS, Azure, and Google Cloud. With open data formats, consistent APIs, and separation of compute and storage, organizations get the same data, analytics, and AI experience across clouds without managing multiple platforms.

2. Can Databricks support global data strategies while meeting regional compliance requirements?

Yes. Databricks enables federated data architectures where data stays in-region to meet residency and regulatory requirements, while governance policies, data models, and analytics standards are managed centrally for global consistency.

3. How does Databricks handle governance across multiple clouds and regions?

Databricks uses Unity Catalog to provide centralized metadata management (one metastore per region, shared by that region’s workspaces), fine-grained access controls, lineage, and auditing. This allows enterprises to enforce consistent governance policies globally without slowing down teams.

4. How does Databricks enable secure data sharing across regions and clouds?

With Delta Sharing, Databricks allows organizations to share live data securely across clouds and regions without copying or moving data. Access can be controlled dynamically, making it ideal for global analytics, partner collaboration, and M&A scenarios.

5. Can Databricks support AI and machine learning workloads consistently across clouds?

Absolutely. Databricks provides unified ML workflows using MLflow, Feature Store, and Mosaic AI across AWS, Azure, and GCP. This ensures models can be trained, governed, and deployed close to data sources while maintaining consistency across regions.

Related Posts

How Detroit Manufacturing Companies Are Using Cloud Data Storage to Scale Operations

Cloud-powered data storage for modern manufacturing

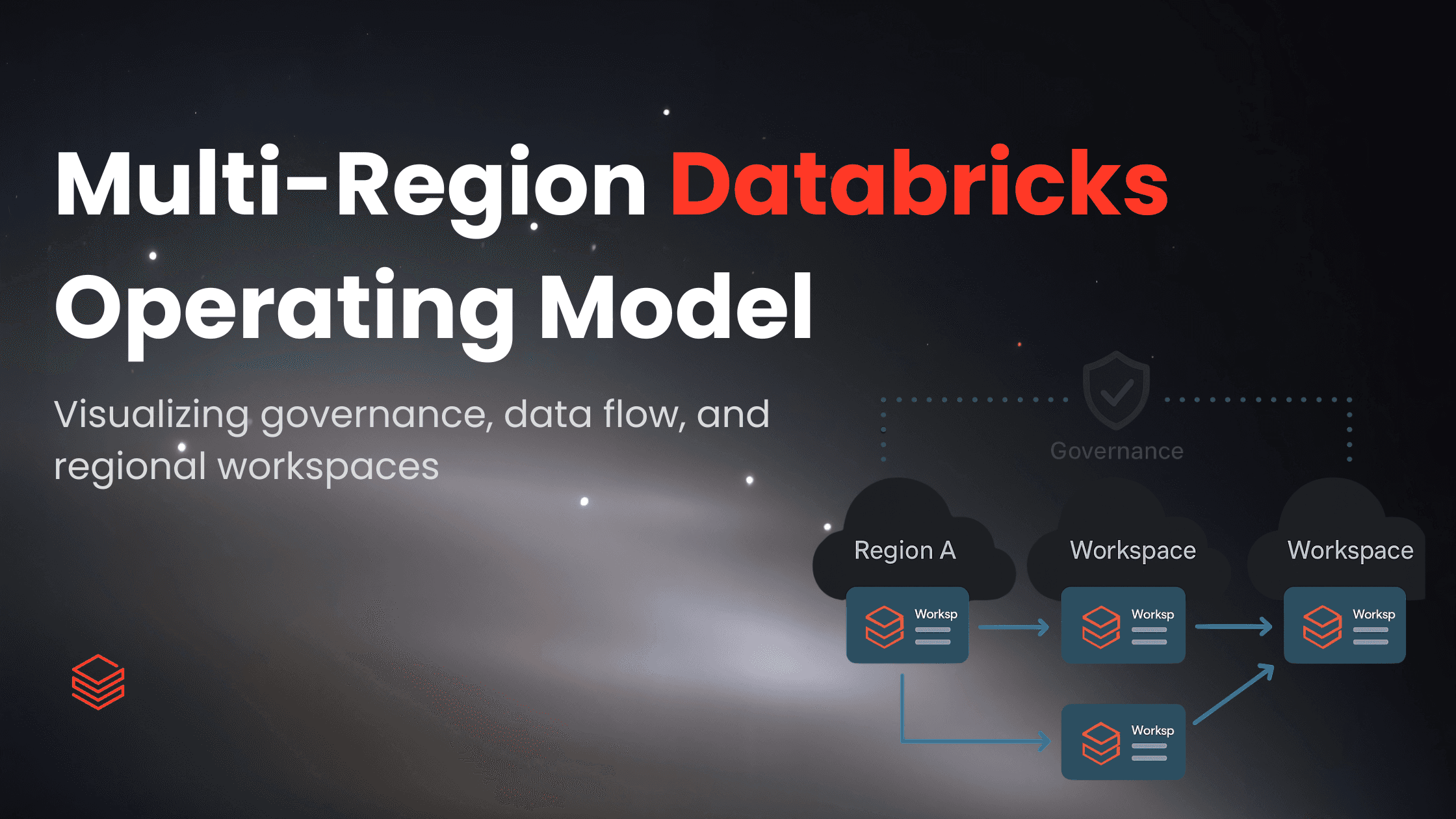

How to Plan Databricks Adoption for a Multi-Region Enterprise

Framework for multi-region Databricks adoption

From Data Lakehouse to Agent: How to Deploy AI Agents over Your Enterprise Data in Databricks

Detroit’s data leads smarter decisions across industries.